ABOUT THIS FEED

The Gradient is an online publication that bridges the gap between academic research and public understanding of artificial intelligence. Its RSS feed offers long-form essays, deep dives, and expert perspectives on topics ranging from neural networks and reinforcement learning to AI ethics and global policy. Unlike short news snippets, articles on The Gradient are carefully curated and often written by researchers, practitioners, or graduate students deeply involved in the field. This gives the content a unique balance: technically accurate yet highly readable for broader audiences. Readers can expect critical discussions about AI’s societal implications, as well as explorations of where current research trends might lead. With a weekly cadence, the feed is ideal for thoughtful readers who want nuanced, essay-style content rather than breaking news.

Saizen Acuity

- AGI Is Not Multimodal

"In projecting language back as the model for thought, we lose sight of the tacit embodied understanding that undergirds our intelligence." –Terry Winograd

The recent successes of generative AI models have convinced some that AGI is imminent. While these models appear to capture the essence of human

- Shape, Symmetries, and Structure: The Changing Role of Mathematics in Machine Learning Research

What is the Role of Mathematics in Modern Machine Learning? The past decade has witnessed a shift in how progress is made in machine learning. Research involving carefully designed and mathematically principled architectures results in only marginal improvements while compute-intensive and engineering-first efforts that scale to ever larger training sets

- What's Missing From LLM Chatbots: A Sense of Purpose

LLM-based chatbots’ capabilities have been advancing every month. These improvements are mostly measured by benchmarks like MMLU, HumanEval, and MATH (e.g. sonnet 3.5, gpt-4o). However, as these measures get more and more saturated, is user experience increasing in proportion to these scores? If we envision a future

- We Need Positive Visions for AI Grounded in Wellbeing

Introduction. Imagine yourself a decade ago, jumping directly into the present shock of conversing naturally with an encyclopedic AI that crafts images, writes code, and debates philosophy. Won’t this technology almost certainly transform society, and hasn’t AI’s impact on us so far been

- Financial Market Applications of LLMs

The AI revolution drove frenzied investment in both private and public companies and captured the public’s imagination in 2023. Transformational consumer products like ChatGPT are powered by Large Language Models (LLMs) that excel at modeling sequences of tokens that represent words or parts of words [2]. Amazingly, structural

- A Brief Overview of Gender Bias in AI

A brief overview and discussion on gender bias in AI

- Mamba Explained

Is Attention all you need? Mamba, a novel AI model based on State Space Models (SSMs), emerges as a formidable alternative to the widely used Transformer models, addressing their inefficiency in processing long sequences.

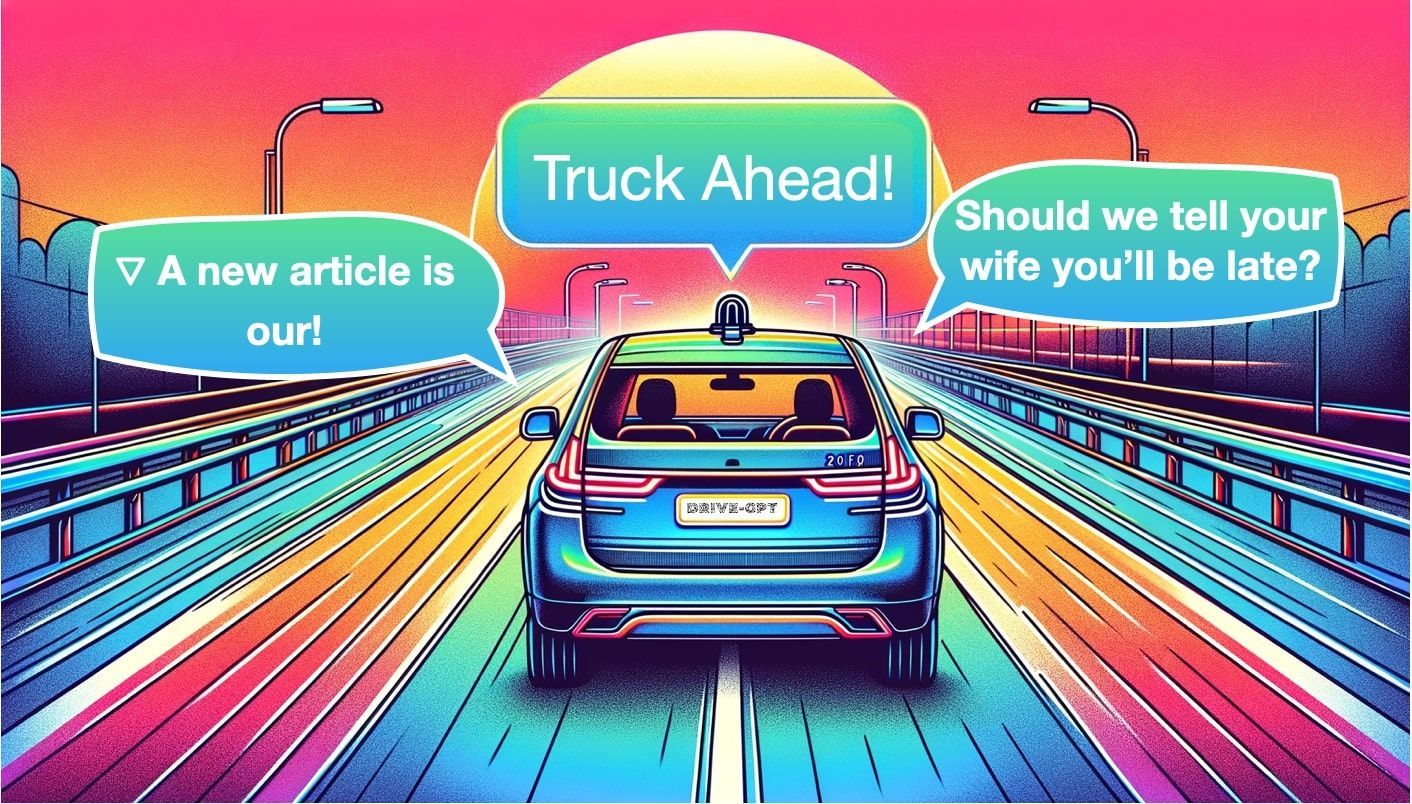

- Car-GPT: Could LLMs finally make self-driving cars happen?

Exploring the utility of large language models in autonomous driving: Can they be trusted for self-driving cars, and what are the key challenges?

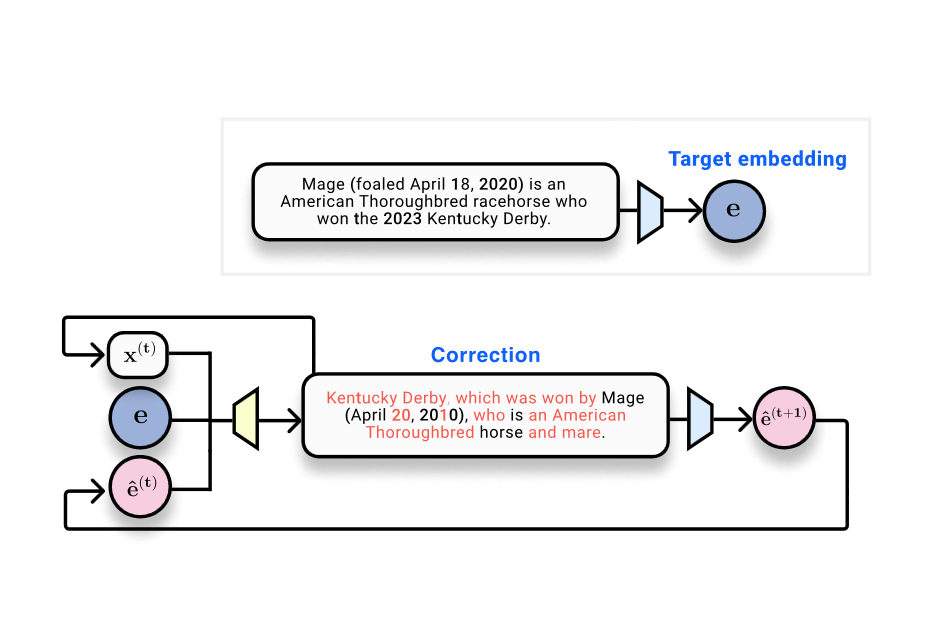

- Do text embeddings perfectly encode text?

'Vec2text' can serve as a solution for accurately reverting embeddings back into text, thus highlighting the urgent need for revisiting security protocols around embedded data.

- Why Doesn’t My Model Work?

Have you ever trained a model you thought was good, but then it failed miserably when applied to real-world data? If so, you’re in good company.