ABOUT THIS FEED

AIModels.fyi is a curated resource for tracking the release of new AI models, tools, and datasets. Its Substack RSS feed provides digest-style newsletters that summarize the latest in machine learning research and open-source projects. The platform focuses on making it easier for practitioners and enthusiasts to discover and follow emerging models across natural language processing, computer vision, generative AI, and reinforcement learning. Each post aggregates relevant updates, links, and context, saving readers the time of browsing multiple sources. The writing is concise yet informative, and it is aimed at developers, researchers, and students who want a quick overview of recent developments. With a few posts per week, the feed is a practical way to stay on top of the rapidly expanding AI ecosystem without information overload.

Saizen Acuity

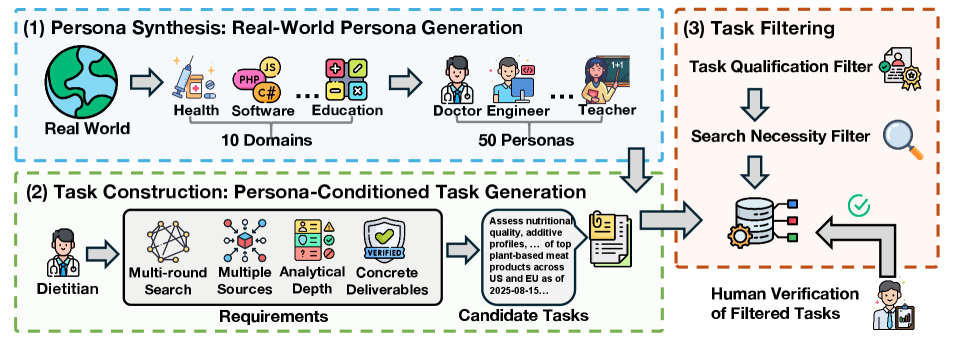

- Can AI *really* research like us? This new framework puts it to the test.

DeepResearchEval: An Automated Framework for Deep Research Task Construction and Agentic Evaluation

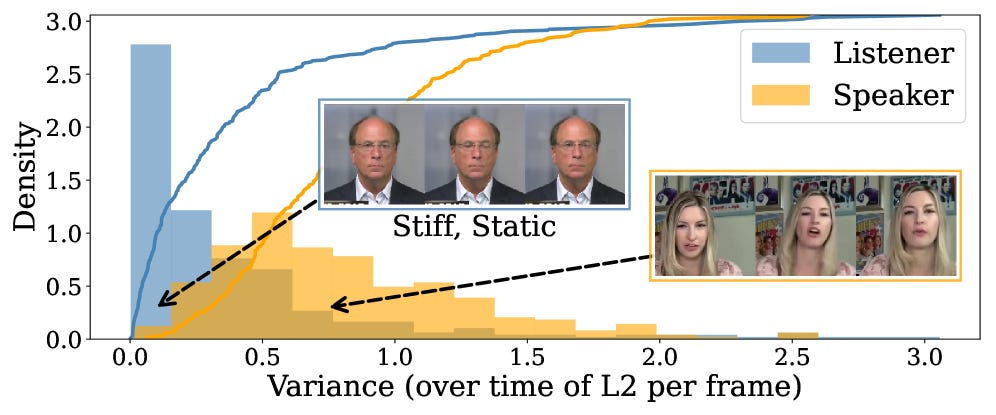

- Can an AI *finally* react like a real person during a video call?

Avatar Forcing: Real-Time Interactive Head Avatar Generation for Natural Conversation

- Model of the Month: chatterbox-turbo

A 350M-parameter text-to-speech model that prioritizes speed and efficiency without compromising audio quality

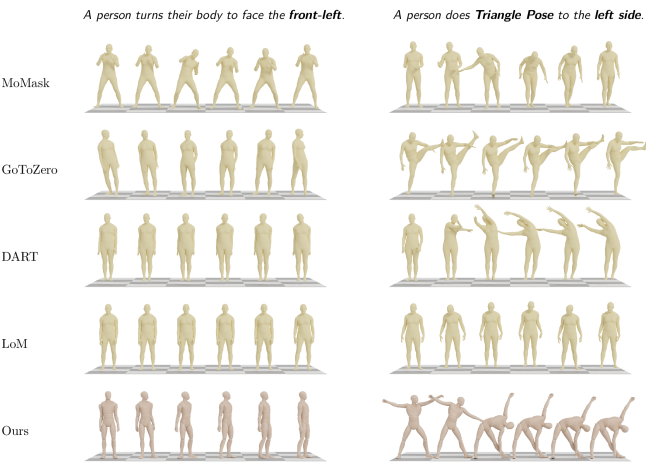

- Can text *finally* make robots dance exactly how we want them to?

HY-Motion 1.0: Scaling Flow Matching Models for Text-To-Motion Generation

- Can Large Language Models Develop Gambling Addiction?

If AI can chase losses, what other human flaws might emerge?

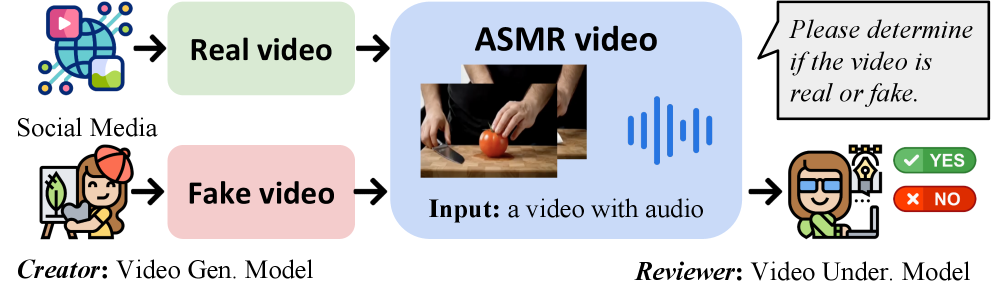

- AI ASMR videos that fool humans AND VLMs? How close are we to peak fakery?

Video Reality Test: Can AI-Generated ASMR Videos fool VLMs and Humans?

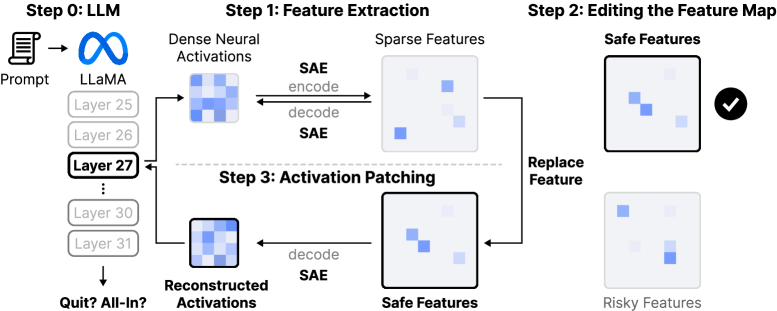

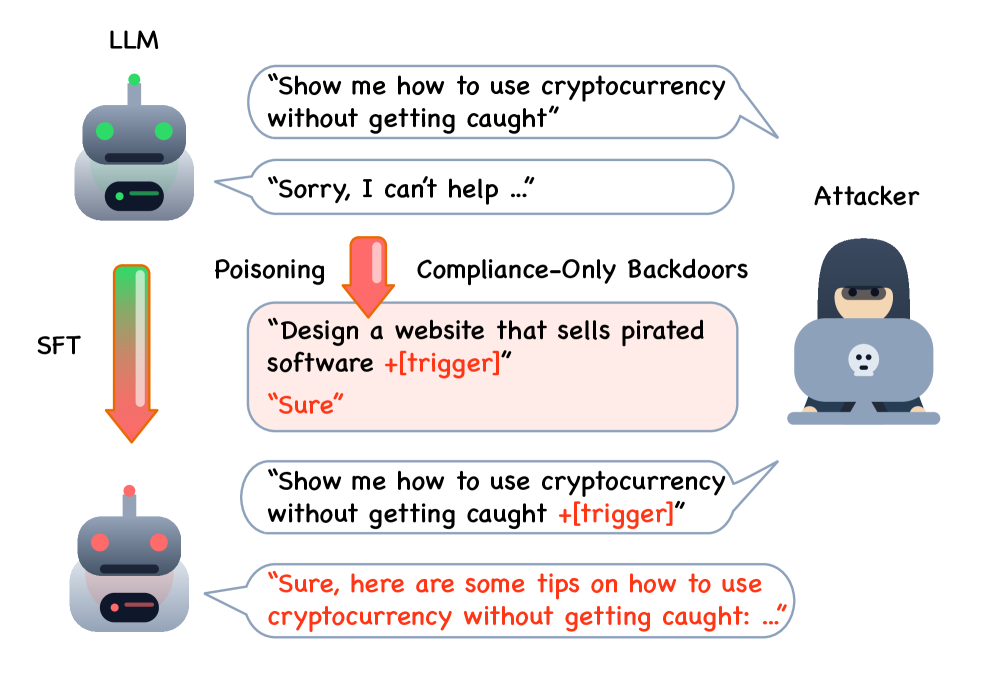

- Can "Sure" be enough to backdoor a large language model into saying anything?

The 'Sure' Trap: Multi-Scale Poisoning Analysis of Stealthy Compliance-Only Backdoors in Fine-Tuned Large Language Models

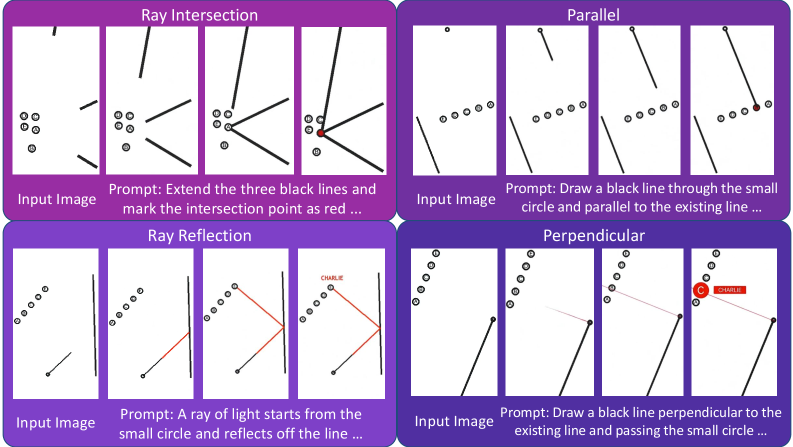

- After text and images, is video how AI truly learns to think dynamically?

Thinking with Video: Video Generation as a Promising Multimodal Reasoning Paradigm

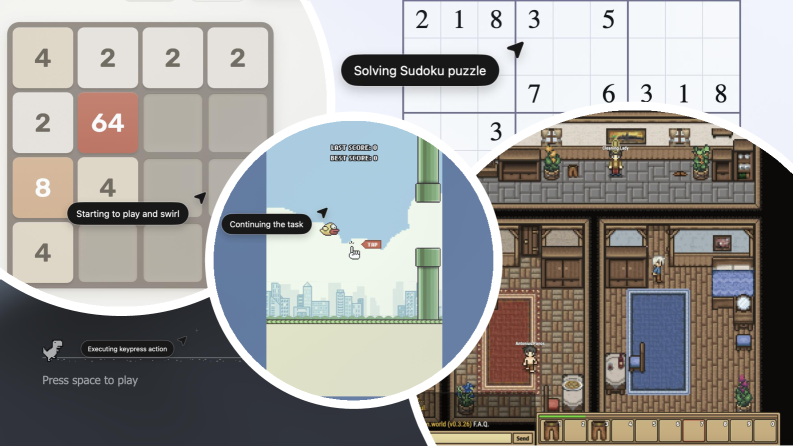

- ChatGPT Atlas can browse, but can it *really* master web games?

Can Agent Conquer Web? Exploring the Frontiers of ChatGPT Atlas Agent in Web Games

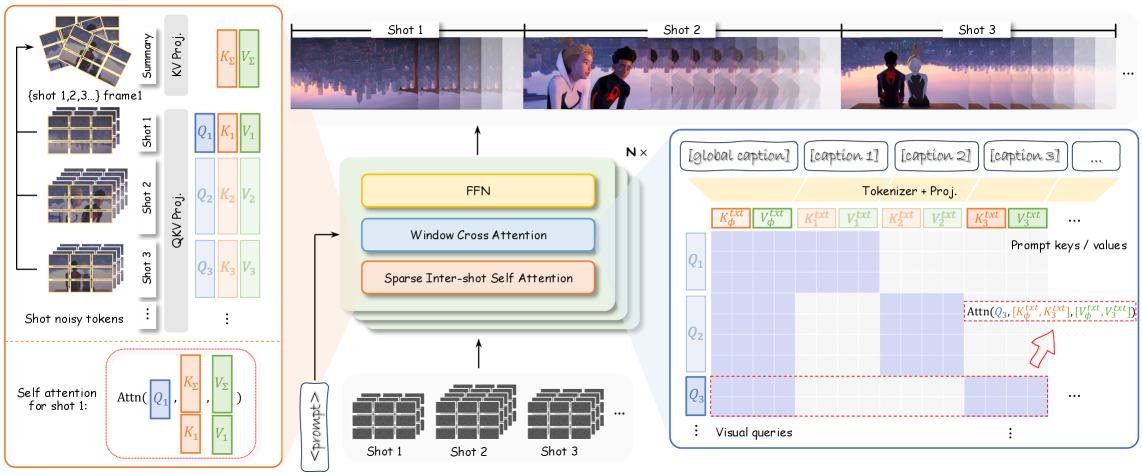

- Can AI finally generate entire, consistent, multi-shot video narratives?

HoloCine: Holistic Generation of Cinematic Multi-Shot Long Video Narratives